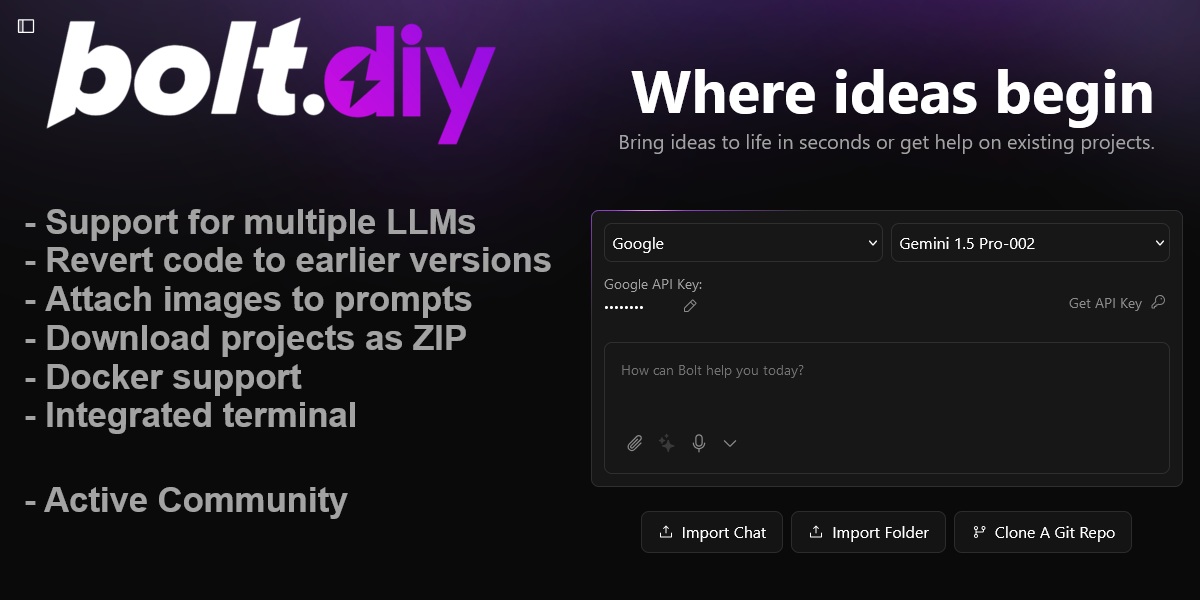

bolt.diy (Previously oTToDev)

Welcome to bolt.diy, the official open source version of Bolt.new (previously known as oTToDev and bolt.new ANY LLM), which allows you to choose the LLM that you use for each prompt! Currently, you can use OpenAI, Anthropic, Ollama, OpenRouter, Gemini, LMStudio, Mistral, xAI, HuggingFace, DeepSeek, or Groq models - and it is easily extended to use any other model supported by the Vercel AI SDK! See the instructions below for running this locally and extending it to include more models.

Check the bolt.diy Docs for more information.

Also, this pinned post in our community has a bunch of incredible resources for running and deploying bolt.diy yourself!

We have also launched an experimental agent called the "bolt.diy Expert" that can answer common questions about bolt.diy. Find it here on the oTTomator Live Agent Studio.

bolt.diy was originally started by Cole Medin but has quickly grown into a massive community effort to build the BEST open source AI coding assistant!

Table of Contents

- Join the Community

- Requested Additions

- Features

- Setup

- Running the Application

- Available Scripts

- Contributing

- Roadmap

- FAQ

Join the Community

Join the bolt.diy community here, in the oTTomator Think Tank!

Requested Additions

- OpenRouter Integration (@coleam00)

- Gemini Integration (@jonathands)

- Autogenerate Ollama models from what is downloaded (@yunatamos)

- Filter models by provider (@jasonm23)

- Download project as ZIP (@fabwaseem)

- Improvements to the main bolt.new prompt in app\lib\.server\llm\prompts.ts (@kofi-bhr)

- DeepSeek API Integration (@zenith110)

- Mistral API Integration (@ArulGandhi)

- "Open AI Like" API Integration (@ZerxZ)

- Ability to sync files (one way sync) to local folder (@muzafferkadir)

- Containerize the application with Docker for easy installation (@aaronbolton)

- Publish projects directly to GitHub (@goncaloalves)

- Ability to enter API keys in the UI (@ali00209)

- xAI Grok Beta Integration (@milutinke)

- LM Studio Integration (@karrot0)

- HuggingFace Integration (@ahsan3219)

- Bolt terminal to see the output of LLM run commands (@thecodacus)

- Streaming of code output (@thecodacus)

- Ability to revert code to earlier version (@wonderwhy-er)

- Cohere Integration (@hasanraiyan)

- Dynamic model max token length (@hasanraiyan)

- Better prompt enhancing (@SujalXplores)

- Prompt caching (@SujalXplores)

- Load local projects into the app (@wonderwhy-er)

- Together Integration (@mouimet-infinisoft)

- Mobile friendly (@qwikode)

- Attach images to prompts (@atrokhym)

- Added Git Clone button (@thecodacus)

- Git Import from url (@thecodacus)

- PromptLibrary to have different variations of prompts for different use cases (@thecodacus)

- Detect package.json and commands to auto install & run preview for folder and git import (@wonderwhy-er)

- Selection tool to target changes visually (@emcconnell)

- Detect terminal Errors and ask bolt to fix it (@thecodacus)

- Detect preview Errors and ask bolt to fix it (@wonderwhy-er)

- Add Starter Template Options (@thecodacus)

- Perplexity Integration (@meetpateltech)

- AWS Bedrock Integration (@kunjabijukchhe)

- HIGH PRIORITY - Prevent bolt from rewriting files as often (file locking and diffs)

- HIGH PRIORITY - Better prompting for smaller LLMs (code window sometimes doesn't start)

- HIGH PRIORITY - Run agents in the backend as opposed to a single model call

- Deploy directly to Vercel/Netlify/other similar platforms

- Have LLM plan the project in a MD file for better results/transparency

- VSCode Integration with git-like confirmations

- Upload documents for knowledge - UI design templates, a code base to reference coding style, etc.

- Voice prompting

- Azure Open AI API Integration

- Vertex AI Integration

Features

- AI-powered full-stack web development directly in your browser.

- Support for multiple LLMs with an extensible architecture to integrate additional models.

- Attach images to prompts for better contextual understanding.

- Integrated terminal to view output of LLM-run commands.

- Revert code to earlier versions for easier debugging and quicker changes.

- Download projects as ZIP for easy portability.

- Integration-ready Docker support for a hassle-free setup.

Setup

If you're new to installing software from GitHub, don't worry! If you encounter any issues, feel free to submit an "issue" using the provided links, or improve this documentation by forking the repository, editing the instructions, and submitting a pull request. The following instructions will help you get the stable branch running on your local machine.

Let's get you up and running with the stable version of Bolt.DIY!

Quick Download

- On the latest release page, click source.zip

Prerequisites

Before you begin, you'll need to install the following software:

Install Node.js

Node.js is required to run the application.

- Visit the Node.js Download Page

- Download the "LTS" (Long Term Support) version for your operating system

- Run the installer, accepting the default settings

- Verify Node.js is properly installed:

  - For Windows Users:

    - Press Windows + R

    - Type "sysdm.cpl" and press Enter

    - Go to "Advanced" tab → "Environment Variables"

    - Check if Node.js appears in the "Path" variable

  - For Mac/Linux Users:

    - Open Terminal

    - Type this command: echo $PATH

    - Look for /usr/local/bin in the output
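The PATH checks above only confirm where Node.js might live. A more direct check is to ask the tools for their versions. The sketch below does that for a POSIX shell (Terminal on Mac/Linux, or Git Bash on Windows); the helper names `node_major` and `check_node` are made up for illustration and are not part of bolt.diy.

```shell
#!/bin/sh
# Hypothetical helper: extract the major version from a string such as "v20.11.1".
node_major() {
  version="${1#v}"        # drop the leading "v" that `node --version` prints
  echo "${version%%.*}"   # keep only the part before the first dot
}

# Hypothetical helper: report whether `node` and `npm` resolve on PATH.
check_node() {
  for tool in node npm; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool: not found on PATH"
      return 1
    fi
  done
  echo "node major version: $(node_major "$(node --version)")"
  echo "npm version: $(npm --version)"
}

check_node || echo "Install Node.js (LTS) and reopen the terminal."
```

If `check_node` reports a missing tool right after installation, opening a new terminal window usually picks up the updated PATH.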

Running the Application

You have two options for running Bolt.DIY: directly on your machine or using Docker.

Option 1: Direct Installation (Recommended for Beginners)

Install Package Manager (pnpm):

    npm install -g pnpm

Install Project Dependencies:

    pnpm install

Start the Application:

    pnpm run dev

Important Note: If you're using Google Chrome, you'll need Chrome Canary for local development. Download it here

Option 2: Using Docker

This option requires some familiarity with Docker but provides a more isolated environment.

Additional Prerequisite

- Install Docker: Download Docker

Steps:

Build the Docker Image:

    # Using npm script:
    npm run dockerbuild

    # OR using direct Docker command:
    docker build . --target bolt-ai-development

Run the Container:

    docker-compose --profile development up

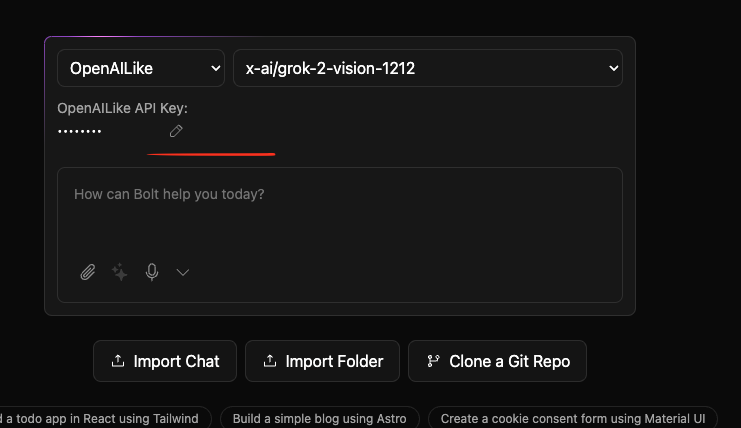

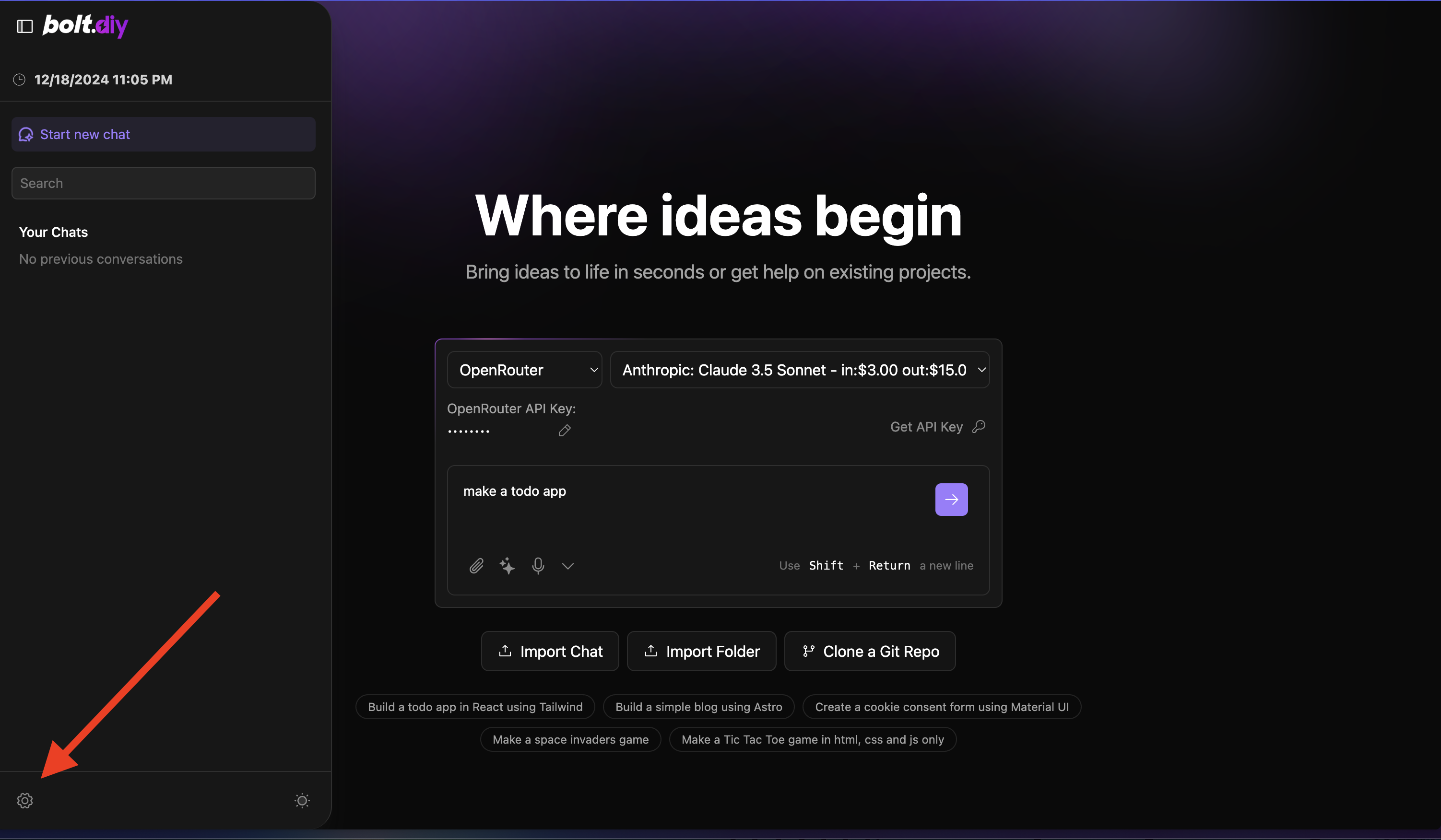

Configuring API Keys and Providers

Adding Your API Keys

Setting up your API keys in Bolt.DIY is straightforward:

- Open the home page (main interface)

- Select your desired provider from the dropdown menu

- Click the pencil (edit) icon

- Enter your API key in the secure input field

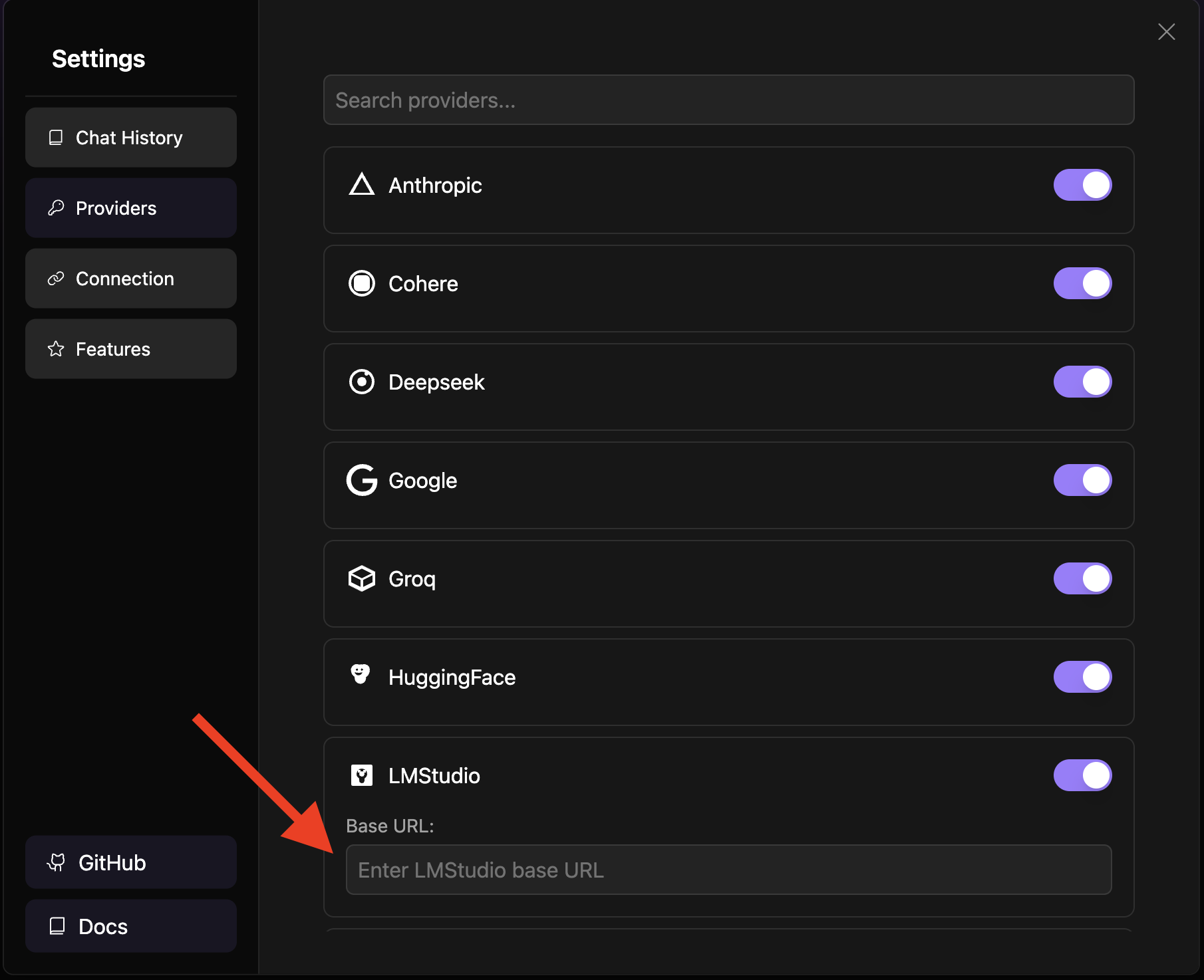

Configuring Custom Base URLs

For providers that support custom base URLs (such as Ollama or LM Studio), follow these steps:

- Click the settings icon in the sidebar to open the settings menu

- Navigate to the "Providers" tab

- Search for your provider using the search bar

- Enter your custom base URL in the field shown for that provider

Note: Custom base URLs are particularly useful when running local instances of AI models or using custom API endpoints.

Supported Providers

- Ollama

- LM Studio

- OpenAILike
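Before entering a custom base URL for a local provider, it can help to confirm that the server actually answers at that address. The sketch below only builds the model-listing URL to probe. It assumes Ollama's `/api/tags` route and LM Studio's OpenAI-compatible `/v1/models` route, and the ports shown are the usual local defaults rather than values taken from bolt.diy. The helper name `models_url` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: build the model-listing URL for a local provider.
# Assumes Ollama's /api/tags route and LM Studio's /v1/models route.
models_url() {
  provider="$1"
  base="${2%/}"   # strip one trailing slash, if present
  case "$provider" in
    ollama)   echo "$base/api/tags" ;;
    lmstudio) echo "$base/v1/models" ;;
    *)        echo "unknown provider: $provider" >&2; return 1 ;;
  esac
}

# Print the URLs that would be probed (ports are assumed defaults).
models_url ollama "http://127.0.0.1:11434"
models_url lmstudio "http://127.0.0.1:1234/"
```

With the server running, `curl -fsS "$(models_url ollama http://127.0.0.1:11434)"` should return a JSON list of models; a connection error suggests the base URL or port is wrong.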

Setup Using Git (For Developers only)

This method is recommended for developers who want to:

- Contribute to the project

- Stay updated with the latest changes

- Switch between different versions

- Create custom modifications

Prerequisites

- Install Git: Download Git

Initial Setup

Clone the Repository:

    # Using HTTPS
    git clone https://github.com/stackblitz-labs/bolt.diy.git

Navigate to Project Directory:

    cd bolt.diy

Switch to the Main Branch:

    git checkout main

Install Dependencies:

    pnpm install

Start the Development Server:

    pnpm run dev

Staying Updated

To get the latest changes from the repository:

Save Your Local Changes (if any):

    git stash

Pull Latest Updates:

    git pull origin main

Update Dependencies:

    pnpm install

Restore Your Local Changes (if any):

    git stash pop
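The four update steps above can be wrapped in one function. The sketch below stashes only when tracked files have changed, so that `git stash pop` never restores an unrelated older stash. The names `has_local_changes` and `update_bolt` are hypothetical, and the function assumes it is run from the repository root with `origin` pointing at the bolt.diy repository.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when the porcelain status text in $1 is non-empty.
has_local_changes() {
  [ -n "$1" ]
}

# Hypothetical wrapper around the update steps; run it from the repository root.
update_bolt() {
  # --untracked-files=no: a plain `git stash` leaves untracked files alone,
  # so they should not count as something to stash.
  status="$(git status --porcelain --untracked-files=no)" || return 1
  stashed=0
  if has_local_changes "$status"; then
    git stash || return 1
    stashed=1
  fi
  git pull origin main || return 1
  pnpm install || return 1
  # Restore local changes only if this run created the stash.
  if [ "$stashed" -eq 1 ]; then
    git stash pop
  fi
}
```

Call `update_bolt` after sourcing the file. If the pull or install fails partway, the local changes stay in the stash and can be restored manually with `git stash pop`.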

Troubleshooting Git Setup

If you encounter issues:

Clean Installation:

    # Remove node modules and lock files
    rm -rf node_modules pnpm-lock.yaml

    # Clear pnpm cache
    pnpm store prune

    # Reinstall dependencies
    pnpm install

Reset Local Changes:

    # Discard all local changes
    git reset --hard origin/main

Remember to always commit your local changes or stash them before pulling updates to avoid conflicts.

Available Scripts

- pnpm run dev: Starts the development server.

- pnpm run build: Builds the project.

- pnpm run start: Runs the built application locally using Wrangler Pages.

- pnpm run preview: Builds and runs the production build locally.

- pnpm test: Runs the test suite using Vitest.

- pnpm run typecheck: Runs TypeScript type checking.

- pnpm run typegen: Generates TypeScript types using Wrangler.

- pnpm run deploy: Deploys the project to Cloudflare Pages.

- pnpm run lint:fix: Automatically fixes linting issues.

Contributing

We welcome contributions! Check out our Contributing Guide to get started.

Roadmap

Explore upcoming features and priorities on our Roadmap.

FAQ

For answers to common questions, issues, and to see a list of recommended models, visit our FAQ Page.